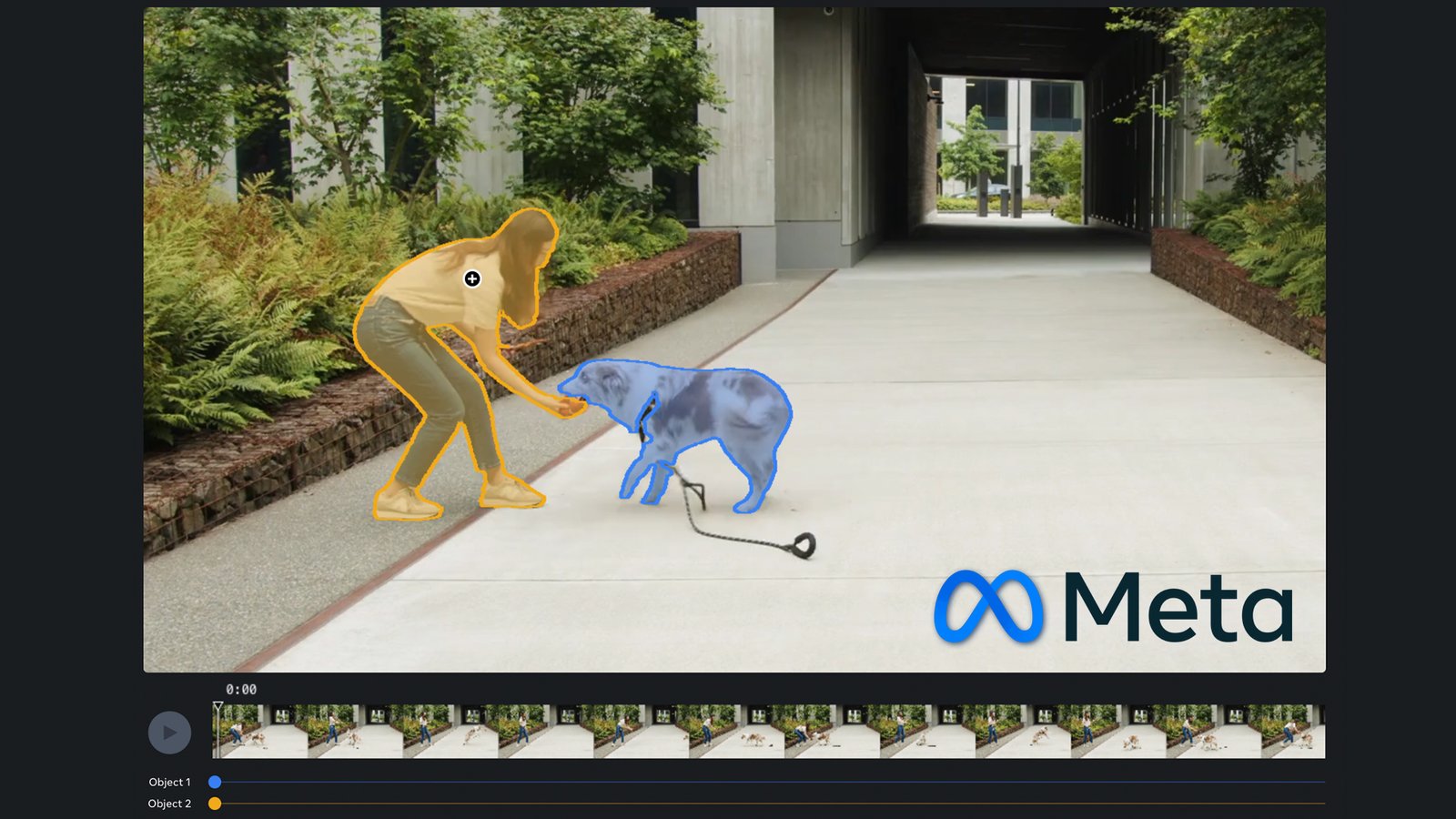

Meta has recently launched SAM 2. Building on the learnings of its predecessor, the new AI model is capable of segmenting various objects in images and videos and tracking them almost in real time. This next generation of the Segment Anything Model (or SAM) has a lot of potential applications, from item identification in AR to fast and simple creative effects in video editing. SAM 2 by Meta is an open-source project and is also available as a web-based demo for testing. So, what are we waiting for? Let's try it out!

If you use Instagram (which also belongs to the Meta family), then you're probably aware of stickers that users can create from any photo or video with just one click. This feature, called "Backdrop and Cutouts," uses Meta's Segment Anything Model (SAM), which was released roughly a year ago. Since then, the company's researchers have gone further and decided to develop a similar tool for moving images. Looking at the first tests: well, they certainly succeeded.

SAM 2 by Meta and its craft

"SAM 2 is a unified model for real-time promptable object segmentation in images and videos that achieves state-of-the-art performance."

A quote from the SAM 2 release paper

According to Meta, SAM 2 can detect and segment any desired object in any video or image, even in visuals that this AI model hasn't seen before and wasn't trained on. The developers based their approach on successful image segmentation and considered each image to be a very short video with a single frame. Adopting this perspective, they were able to create a unified model that supports both image and video input. (If you want to read more about the technical side of SAM 2 training, please head over to the official research paper).

A screenshot from the SAM 2 web-based demo interface.
Image source: Meta

Seasoned video editors would probably shrug their shoulders: DaVinci Resolve has been achieving the same with its AI-enhanced "Magic Mask" for what already seems like ages. Well, a big difference here is that Meta's approach is, on the one hand, open-source and, on the other hand, user-friendly for non-professionals. It can also be applied to more complicated cases, and we will take a look at some below.

Open-source approach

As stated in the release announcement, Meta would like to continue their approach to open science. That's why they are sharing the code and model weights for SAM 2 under a permissive Apache 2.0 license. Anyone can download them here and use them for customized applications and experiences. You can also access the entire SA-V dataset, which was used to train Meta's Segment Anything Model 2. It includes around 51,000 real-world videos and more than 600,000 spatio-temporal masks.

How to use SAM 2 by Meta

For those of us who have no experience in AI model training (myself included), Meta also launched an intuitive and user-friendly web-based demo that anyone can try out for free and without registration. Just head over here, and let's start.

The first step is to choose the video you'd like to alter or enhance. You can either select one of the Meta demo clips available in the library or upload your own. (This option will appear when you click "Change video" in the lower left corner). Now, select any object by simply clicking on it. If you continue clicking on different areas of the video, they will be added to your selection. Alternatively, you can choose "Add another object".
My tests showed that the latter approach brings better results in cases when your objects move separately (for instance, the dog and the girl in the example below).

Image source: a screenshot from the demo test of SAM 2 by Meta

After you click "Track objects," SAM 2 by Meta will take mere seconds and show you the preview. I must admit that my result was almost perfect. The AI model only missed the dog's lead and the ball for a couple of frames.

After you press the "Next" button, the website will let you apply some of the demo effects from the library. These could be simple overlays on objects or a blur on the elements that weren't selected. I went with desaturating the background and adding more contrast to it.

Image source: SAM 2 by Meta

Well, what can I say? Of course, the result is not 100% detail-precise, so we cannot compare it to frame-by-frame masking by hand. Yet for the mere seconds that the AI model needed, it's really impressive. Would you agree?

Possible areas of application for SAM 2 by Meta

As Meta's developers mention, there are various areas of application for SAM 2 in the future, from aiding in scientific research (like segmenting moving cells in videos captured from a microscope) to identifying everyday items via AR glasses and adding tags and/or instructions to them.

Image source: Meta

For filmmakers and video creators, SAM 2 could become a fast solution for simple effects, such as masking out disturbing elements, removing backgrounds, adding tracked-in infographics to objects, outlining elements, and so on. I'm pretty sure we won't wait long to see these options in Instagram stories and reels. And to be honest, this will immensely simplify and accelerate editing workflows for independent creators.

Another possible application of SAM 2 by Meta is to add controllability to generative video models.
As you know, for now, you cannot really ask a text-to-video generator to change a particular element or delete the background. Imagine, though, if that became possible. For the sake of the experiment, I fed SAM 2 a clip generated by Runway Gen-2 roughly a year ago. This one:

Then, I selected both animated characters, turned them into black cutouts, and blurred the rest of the frame. This was the result:

Limitations

Of course, like any model in the research phase, SAM 2 has its limitations, and the developers acknowledge them and promise to work on solutions. These are:

- Sometimes, the model may lose track of objects. This happens especially often during drastic camera viewpoint changes, in crowded scenes with many elements, or in extended videos. Say, when the dog in the test video above leaves the frame and then re-enters it, it's not a problem. But if it were a wild pack of stray dogs, SAM 2 would likely lose the selected one.
- SAM 2 predictions can miss fine details in fast-moving objects.
- The results you have obtained with SAM 2 so far are considered research demos and may not be used for commercial purposes.
- The web-based demo is not open to those accessing it from Illinois or Texas in the US.

You can try it out right now

As mentioned above, SAM 2 by Meta is available both as open-source code and as a web-based demo, which anyone can try out here. You don't need to register for it, and the test platform allows saving the created videos or sharing links to them.

What's your take on Segment Anything Model 2? Do you see it as a rapid improvement for video creators and editors, or does it make you worried? Why? What other areas of application can you imagine for this piece of tech? Let's talk in the comments below!

Feature image source: a screenshot from the demo test of SAM 2 by Meta.