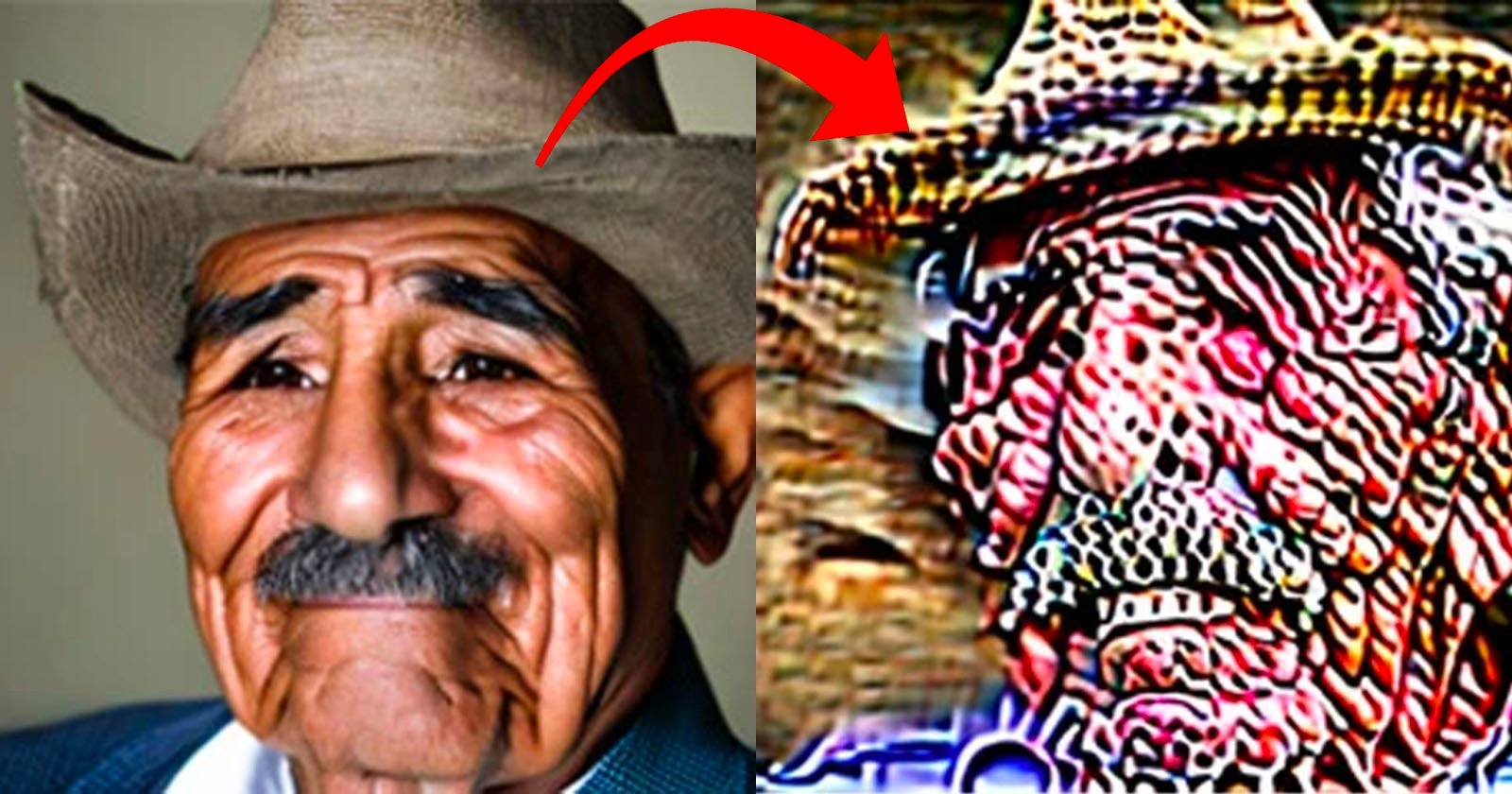

If generative AI models are to continue to grow, they will need high-quality, human-created training data, say scientists who found that using AI content corrupts the output. Researchers from the University of Cambridge discovered that a cannibalistic approach to AI training data quickly leads to models churning out nonsense, and it could prove to be a fork in the road for the rapid expansion of AI. The team used mathematical analysis to show that the problem affects large language models (LLMs) like ChatGPT as well as AI image generators like Midjourney and DALL-E. The study was published in Nature, which gave the example of building an LLM to create Wikipedia-like articles.

*The increasingly distorted images produced by an AI image model that is trained on data generated by a previous version of the model. | M. Boháček & H. Farid/arXiv (CC BY 4.0)*

The researchers say that they kept training new iterations of the model on text produced by its predecessor. As the synthetic data polluted the training set, the model's output became nonsensical.

For example, in an article about English church towers, the model included extensive details about jackrabbits. Although that example is at the extreme end, any AI data can cause models to malfunction. "The message is, we have to be very careful about what ends up in our training data," says co-author Zakhar Shumaylov, an AI researcher at the University of Cambridge, UK. "Otherwise, things will always, probably, go wrong." The team told Nature they were surprised by how fast things started going wrong when AI-generated content was used as training data.

Hany Farid, a computer scientist at the University of California, Berkeley, who has demonstrated the same effect in image models, compares the problem to inbreeding within a species. "If a species inbreeds with their own offspring and doesn't diversify their gene pool, it can lead to a collapse of the species," says Farid.

Shumaylov predicts that this technological quirk means the cost of building AI models will rise as the value of quality data increases. Another concern for AI companies is that as the open web, where most of them scrape their data, fills up with AI content, it will pollute that resource and force them to rethink.
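To make the inbreeding analogy concrete, here is a toy sketch in Python. It is not the researchers' code. It assumes the "model" is nothing more than a bell curve (a Gaussian) fitted to its training data, and that each new generation is trained only on samples drawn from the previous generation's fit. Because every finite sample tends to under-represent rare values, the fitted spread tends to shrink from one generation to the next, so the diversity of the original data is gradually lost. The function `collapse_demo` and its parameters `generations`, `n_samples` and `seed` are hypothetical names chosen for this illustration.

```python
import random
import statistics


def collapse_demo(generations: int = 200, n_samples: int = 20, seed: int = 0) -> list[float]:
    """Toy model collapse: return the fitted standard deviation at each generation.

    Hypothetical illustration only. The "model" is a Gaussian, and "training"
    means fitting its mean and standard deviation to the data it is given.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for the human-created data
    history = [sigma]
    for _ in range(generations):
        # Each generation sees only synthetic samples from the previous model...
        data = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # ...and is "trained" by maximum-likelihood fitting of a new Gaussian.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data, mu)
        history.append(sigma)
    return history


if __name__ == "__main__":
    hist = collapse_demo()
    for gen in (0, 50, 100, 150, 200):
        print(f"generation {gen:3d}: fitted std = {hist[gen]:.4g}")
```

With small samples like these, the fitted spread typically falls by orders of magnitude over a couple of hundred generations. Real LLMs and image generators are vastly more complex, so the sketch only illustrates the direction of the effect, not its speed in practice.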

However, few copyright holders will shed a tear over this problem facing AI companies. Artists, photographers, and content creators of all stripes have been up in arms over the tech industry's brazen use of their work to build AI models.

Image credit: M. Boháček & H. Farid/arXiv (CC BY 4.0)